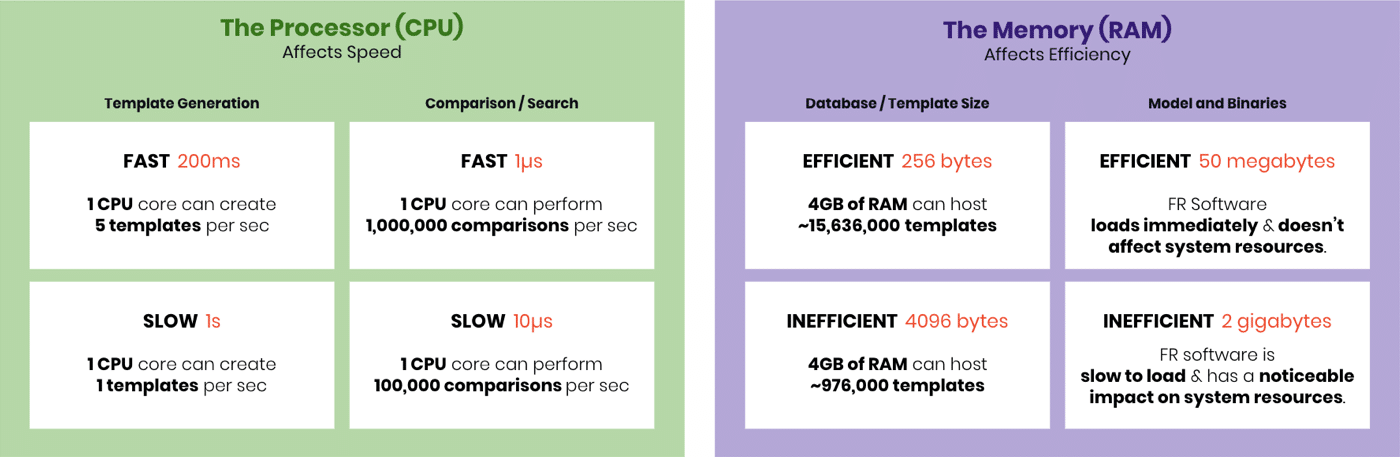

- Template generation speed is the time needed to initially process a face image or video frame.

- Template size is the memory required to represent facial features of a processed face image.

- Comparison speed is the time needed to measure the similarity between two facial templates.

- Binary size is the amount of memory needed to load an algorithm’s model files and software libraries.

The performance of an FR algorithm across these metrics dictates whether it can run on a given hardware system, and efficiency varies tremendously between vendors in the FR industry. The following graphic demonstrates how different metrics can influence the amount of CPU throughput or memory needed for a hardware system:

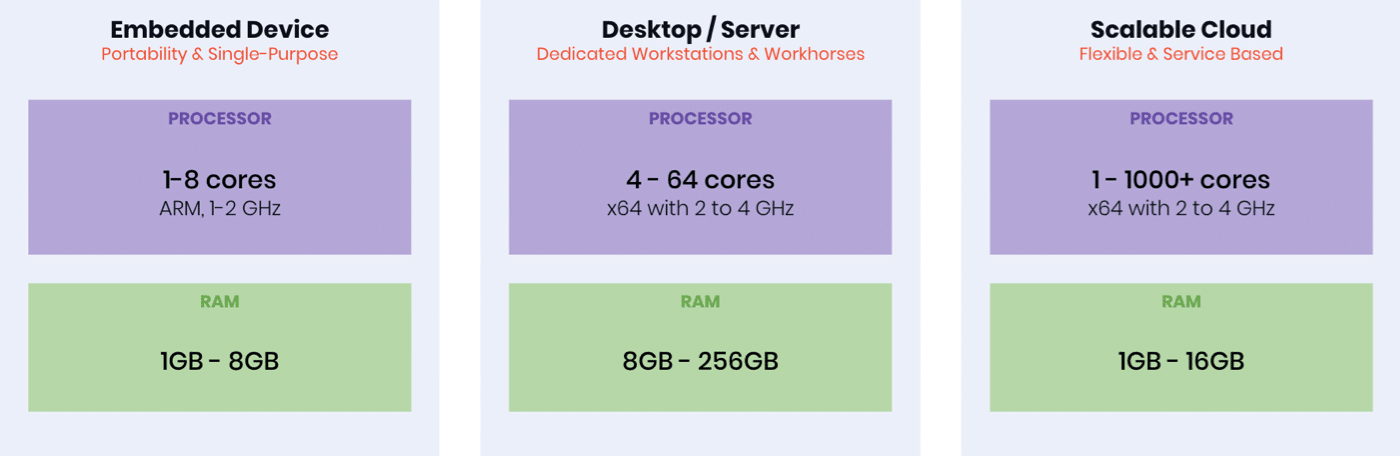

- Persistent server / desktop – low quantity, high cost, high processing power and memory. These systems typically host FR libraries and/or system software, and typically have server-grade x64 processors and potentially GPUs.

- Embedded device – low-cost, high-quantity devices with limited processing power and memory that can either host FR libraries on-edge or operate as a “thin-client” that passes imagery to a server or cloud system for processing. These systems typically have mobile-grade ARM processors and potentially Neural Processing Units (NPUs).

- Scalable cloud – arrays of server resources abstracted through a cloud resource management system.

- Network – communication channels between devices. Networks will have varying amounts of bandwidth depending on their properties.

Different FR system architectures need to be deployed depending on the application and the available hardware resources, and each architecture imposes its own FR algorithm efficiency requirements. This is because processing and memory resources differ across these hardware systems.

Advantages

- Hardware flexibility

- Predictable cost

- Predictable throughput

- High throughput

Disadvantages

- Hardware cost

- Lack of redundancy

- Lack of scalability

- Lack of portability

Algorithm limitations per FR application when using a persistent server

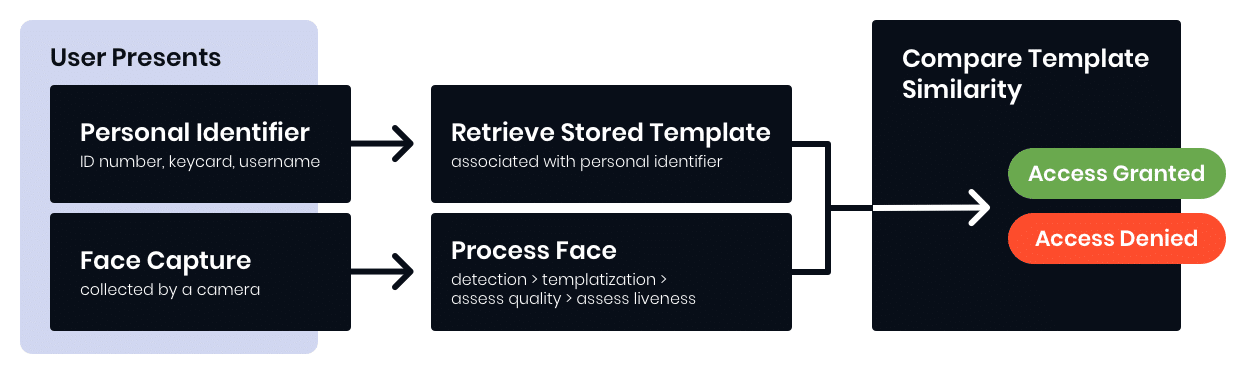

Identity Verification – 1:1

- Slow template generation speed will reduce throughput and increase system response time

- Large binary size will impact system restart speed

- High hardware cost

- Powerful network needed for decentralized sensors

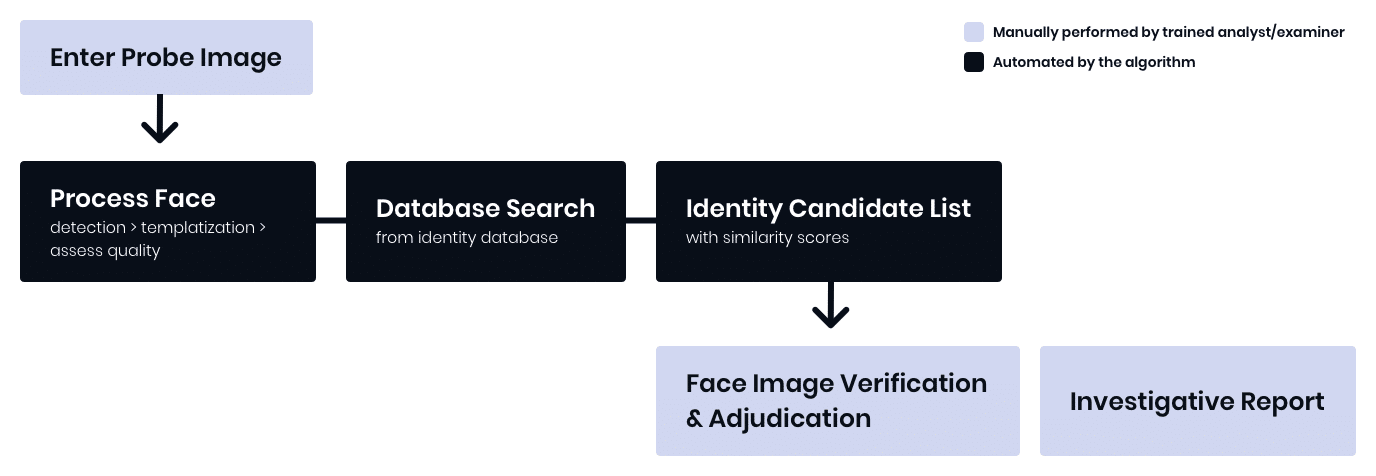

Manual Identification – 1:N

- Large template size will require significant memory resources

- Slow template generation speed will delay search results

- Slow comparison speed will delay search results
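
The 1:N limitations above can be made concrete with a rough estimate. The following is a minimal sketch, assuming a search generates one probe template and then compares it against every gallery template in sequence; the function names and all numbers are hypothetical and are not measurements of any vendor's algorithm.

```python
def search_latency_s(template_gen_s: float, comparison_s: float, gallery_size: int) -> float:
    """Rough time for one 1:N search: one template generation plus one
    comparison per gallery template (assumes a sequential, exhaustive search)."""
    return template_gen_s + comparison_s * gallery_size


def gallery_memory_bytes(template_bytes: int, gallery_size: int) -> int:
    """Memory needed to hold every gallery template in RAM."""
    return template_bytes * gallery_size


if __name__ == "__main__":
    # Hypothetical figures chosen only for illustration.
    latency = search_latency_s(template_gen_s=0.1, comparison_s=1e-6, gallery_size=10_000_000)
    memory_gb = gallery_memory_bytes(template_bytes=256, gallery_size=10_000_000) / 1e9
    print(f"{latency:.1f} s per search, {memory_gb:.2f} GB of templates")
```

Under these assumptions, comparison time dominates the search once the gallery is large, while template size sets the memory footprint.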

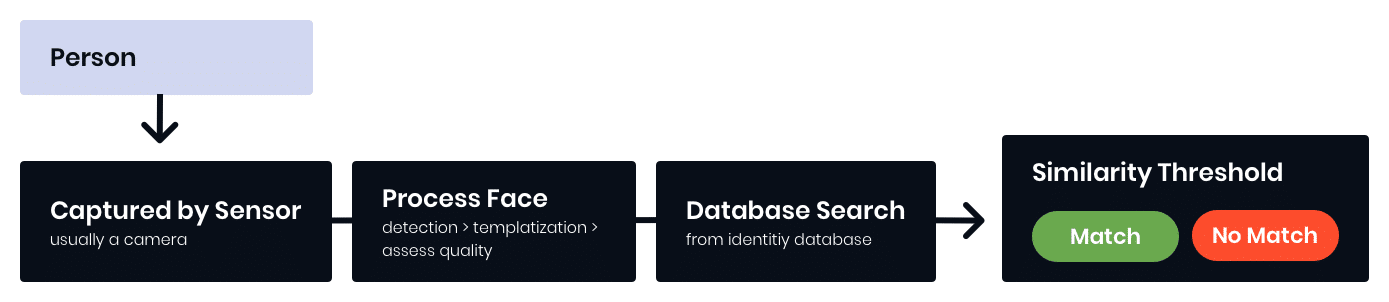

Manual Identification – 1:N+1

- Slow template generation speed will reduce throughput (e.g., video processing)

- Large template and binary sizes will require significant memory resources
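
To illustrate how template generation speed bounds video throughput, here is a minimal sketch. It assumes each CPU core generates one template at a time, that throughput scales linearly with cores, and that face detection and comparison costs are ignored; the names and numbers are hypothetical.

```python
def max_faces_per_second(template_gen_s: float, cpu_cores: int) -> float:
    """Upper bound on templates generated per second (assumes linear scaling)."""
    return cpu_cores / template_gen_s


def can_keep_up(template_gen_s: float, cpu_cores: int,
                streams: int, fps: float, faces_per_frame: float) -> bool:
    """True if the server can template every face in every processed frame."""
    demand = streams * fps * faces_per_frame
    return demand <= max_faces_per_second(template_gen_s, cpu_cores)


if __name__ == "__main__":
    # Hypothetical workload: 4 camera streams, 10 processed frames per second,
    # 2 faces per frame on average, 16 CPU cores.
    for gen_s in (0.05, 0.5):
        ok = can_keep_up(gen_s, cpu_cores=16, streams=4, fps=10, faces_per_frame=2)
        print(f"template generation {gen_s} s -> keeps up: {ok}")
```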

Advantages

- Low hardware cost

- Portability

Disadvantages

- Limited hardware capacity

- Limited power resources

- Requires highly efficient algorithms

Algorithm limitations per FR application when using embedded devices

Identity Verification – 1:1

- Slow template generation speed will cause major latency (> 3 seconds)

- Large binary size will occupy a high percentage of available memory

Manual Identification – 1:N

- Template size must be very small due to memory limits

- Slow template generation speed will significantly delay search results

- Slow comparison speed will significantly delay search results

- Large binary size will occupy a high percentage of available memory

Manual Identification – 1:N+1

- Slow template generation speed will render video processing impossible

- Template size must be very small due to memory limits

- Large binary sizes will strain memory resources
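
The memory constraints above can be sketched as a simple budget check. This is an illustrative estimate only, assuming the binary and the whole gallery must sit in device memory at once; the function names, device size, and algorithm figures are hypothetical.

```python
def memory_fraction(binary_bytes: int, template_bytes: int,
                    gallery_size: int, device_memory_bytes: int) -> float:
    """Fraction of device memory taken by the FR binary plus the gallery."""
    return (binary_bytes + template_bytes * gallery_size) / device_memory_bytes


def max_gallery_size(binary_bytes: int, template_bytes: int,
                     memory_budget_bytes: int) -> int:
    """Largest gallery that fits once the binary has been loaded."""
    return max(0, (memory_budget_bytes - binary_bytes) // template_bytes)


if __name__ == "__main__":
    # Hypothetical device: 1 GB of memory, of which 512 MB is budgeted for FR.
    frac = memory_fraction(200_000_000, 256, 100_000, 1_000_000_000)
    print(f"binary + gallery occupy {frac:.1%} of device memory")
    print("max gallery size:", max_gallery_size(200_000_000, 256, 512_000_000))
```

When the binary alone exceeds the budget, `max_gallery_size` returns 0, which corresponds to the case where the algorithm cannot host any gallery on the device.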

Advantages

- Highly scalable

- Pay per usage

- Redundancy

- Fault tolerance

Disadvantages

- Latency to instantiate new nodes

- Memory limitations

- Higher cost to initially implement

Algorithm limitations per FR application when using the cloud

Identity Verification – 1:1

- Large binary size will slow container instantiation time

- Poor network bandwidth will delay image transmission

- Slow template generation speed will reduce throughput and increase system response time

Manual Identification – 1:N

- Not typically advised

- Large template size or large number of templates will make container instantiation very slow

- Gallery size is typically too large to instantiate containers in less than 30 seconds
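
As a rough illustration of the 30-second concern above, the sketch below estimates how long a new container needs before it can serve 1:N searches. It assumes instantiation time is dominated by loading the binary and the gallery at a fixed rate; the function name, load rate, and sizes are hypothetical.

```python
def instantiation_time_s(binary_bytes: int, template_bytes: int, gallery_size: int,
                         load_bytes_per_s: float, base_startup_s: float = 0.0) -> float:
    """Estimated seconds to start a container and load the binary plus gallery."""
    payload = binary_bytes + template_bytes * gallery_size
    return base_startup_s + payload / load_bytes_per_s


if __name__ == "__main__":
    # Hypothetical: 500 MB binary, 1 KB templates, 10 million gallery entries,
    # and a 200 MB/s effective load rate.
    t = instantiation_time_s(500_000_000, 1_000, 10_000_000, 200_000_000)
    verdict = "exceeds" if t > 30 else "fits within"
    print(f"estimated instantiation: {t:.1f} s ({verdict} a 30 s target)")
```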

Manual Identification – 1:N+1

- Slow template generation speed makes video processing expensive

- Large template size, large number of templates, and/or large binary size will make container instantiation very slow

- Poor network bandwidth will prevent video transmission to the cloud