Unlike fingerprint or iris recognition, facial appearances are public. In turn, facial images have become widely available – trillions of them scattered across the Internet, plus the endless hours of faces embedded in video streaming services. A nearly endless source of facial imagery exists in every corner of the digital world. Even relative to other non-biometric computer vision or machine learning classification tasks, the availability of facial appearance data vastly exceeds data available to train other classification algorithms. In many ways, due to the overwhelming legacy of widely distributed facial imagery, it’s likely that facial recognition will continue down this path to become the most accurate machine learning technology in the world.

In this article, we’ll explore classic considerations for facial biometric traits and discuss how they align with modern advances and use cases. Through this examination, we have identified the following “pros” and “cons” of face recognition technology:

| Pros | Cons |
| --- | --- |
| Highly Accurate | Challenges with Familial Similarity |
| Convenient | Challenges with Cosmetic Modification |
| Default Method for Humans | Risks of Spoofing |

Accuracy

Though historically face recognition has been the least accurate of the “Big Three” biometrics (facial appearance, fingerprint ridge patterns, and iris texture), in recent years face recognition technology capabilities have surged to become the world’s most accurate biometric technology.

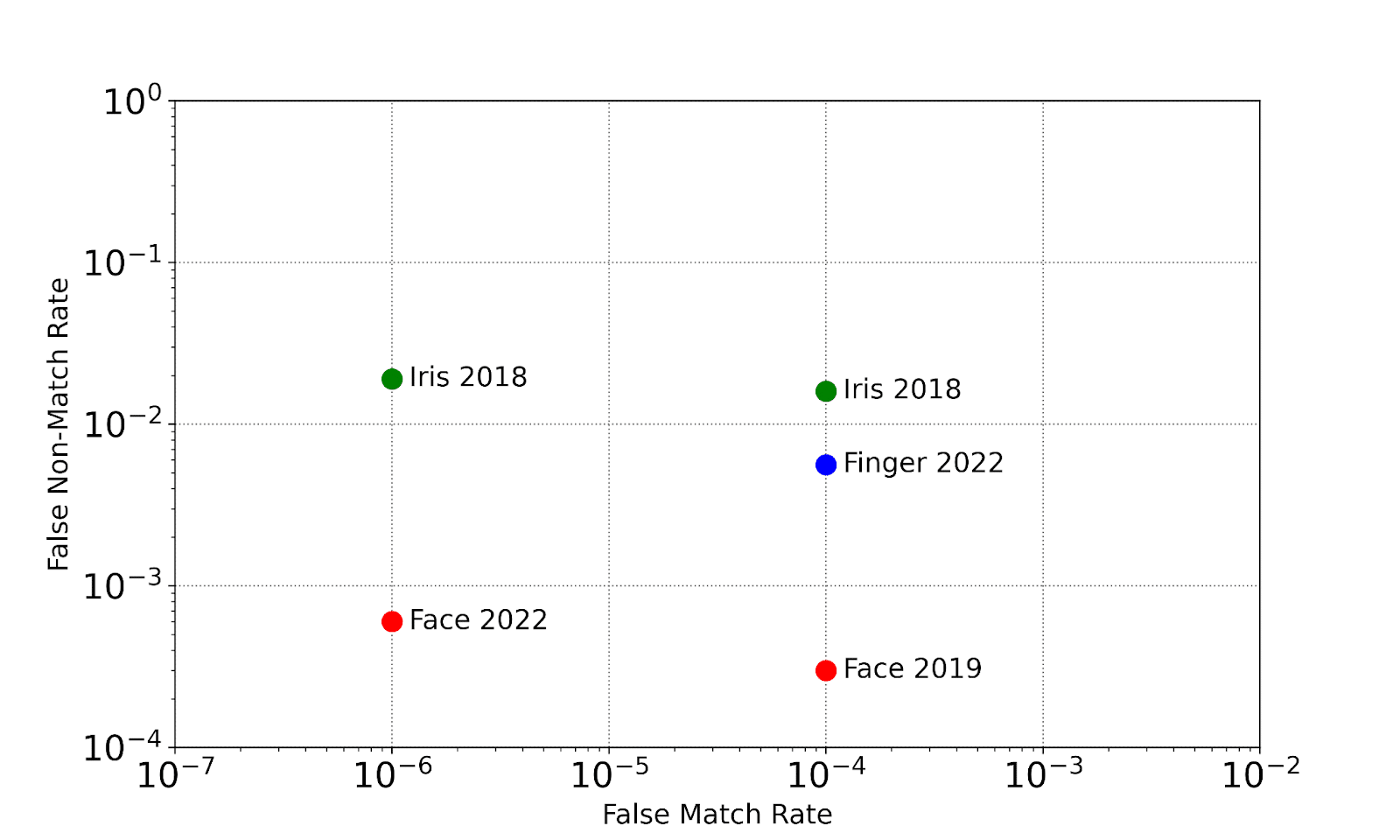

Comparing Biometric Accuracy (Face, Fingerprint, Iris)

Sources:

Face: NIST FRVT Ongoing, Oct 2022 – Visa Dataset

Iris: NIST IREX IX report, April 2018 – Table D1, Single Eye – NOTE: the current NIST IREX Ongoing does not include 1:1 measurements

Fingerprint: NIST PFT, Oct 2022 – MINEX III Dataset, Single Finger

While these error rates are not a perfect apples-to-apples comparison, error rates produced by face recognition algorithms are significantly lower than those measured by any single finger or iris sample.

Fingerprint has long been considered the gold standard for biometric accuracy (outside of invasive methods like DNA), so many are surprised to hear that face recognition error rates are often substantially lower.

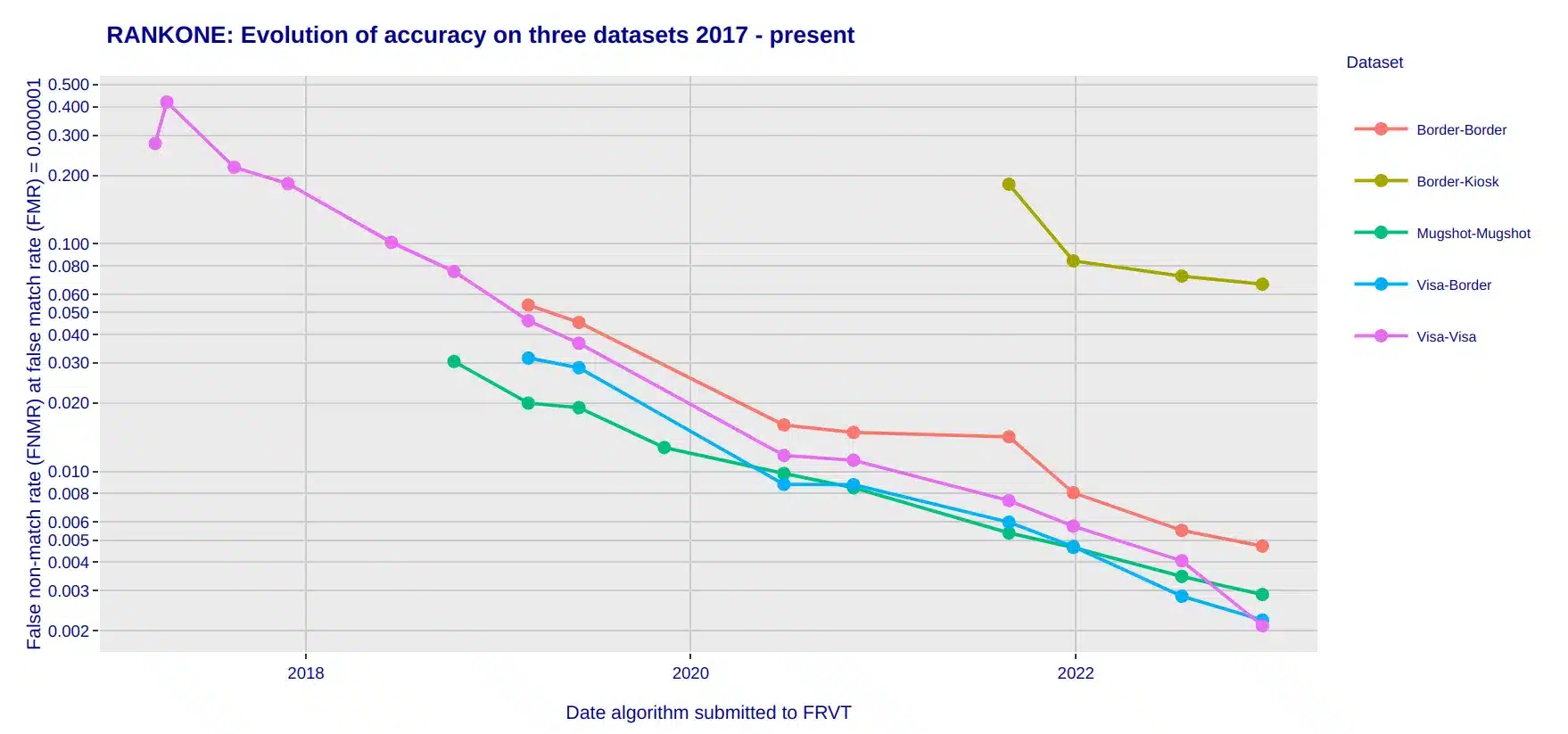

A closer look at the last five years of accuracy testing reveals an exponential decrease in error rates.

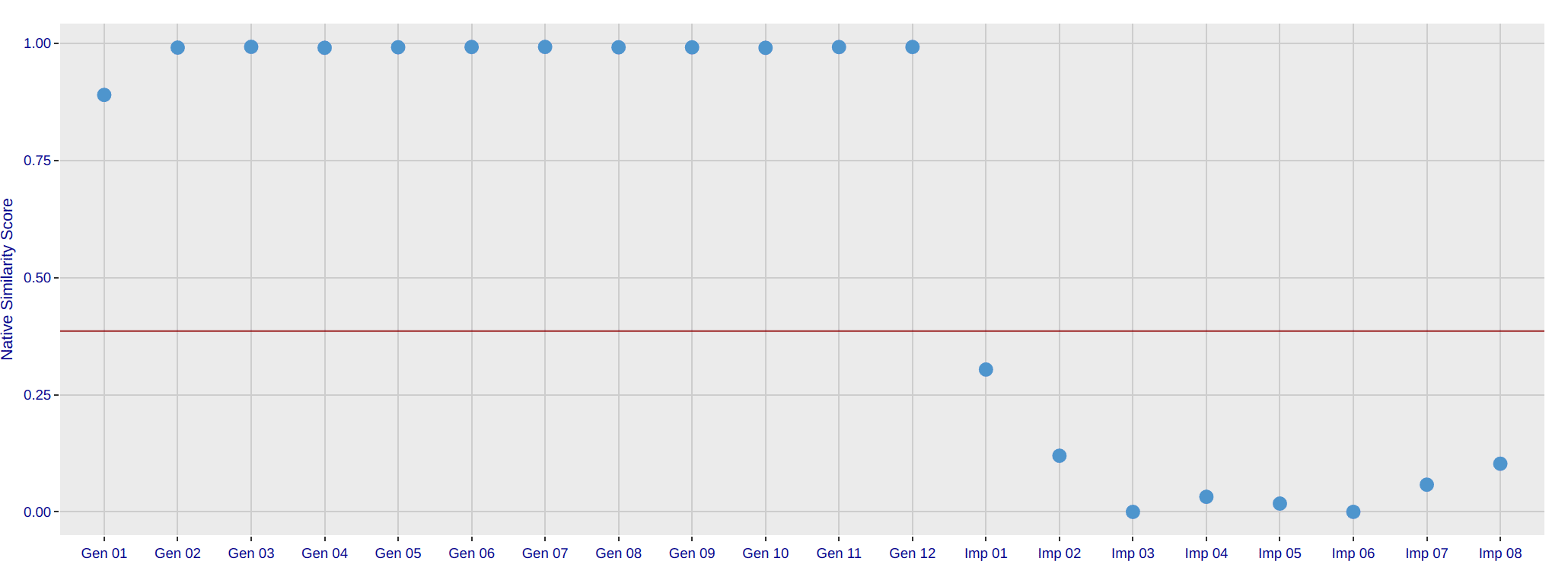

Evolution of ROC.ai Face Recognition Accuracy, 2017 – 2023

Over the past 5 years, when operating at a False Match Rate of 1 in 1 Million, the False Non-Match Rate (FNMR) error has decreased by over 50x.

Source: https://pages.NIST.gov/frvt/reportcards/11/rankone_014.html
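To make the metric above concrete, here is a minimal sketch of how a False Non-Match Rate can be read off at a fixed False Match Rate. The function name `fnmr_at_fmr`, the toy similarity scores, and the 1-in-10 operating point are all illustrative assumptions; real evaluations such as NIST's use millions of comparisons to reach a 1-in-1-million FMR, and this is not NIST's implementation.

```python
def fnmr_at_fmr(genuine_scores, impostor_scores, target_fmr):
    """Return (threshold, fnmr) at the lowest threshold whose FMR <= target_fmr.

    Scores are similarity scores: higher means more likely the same person.
    A comparison is declared a match when score >= threshold.
    """
    # Candidate thresholds: every observed score, plus one above the maximum
    # so that an FMR of zero is always reachable.
    candidates = sorted(set(genuine_scores) | set(impostor_scores))
    candidates.append(candidates[-1] + 1.0)

    for threshold in candidates:
        false_matches = sum(1 for s in impostor_scores if s >= threshold)
        fmr = false_matches / len(impostor_scores)
        if fmr <= target_fmr:
            false_non_matches = sum(1 for s in genuine_scores if s < threshold)
            return threshold, false_non_matches / len(genuine_scores)


# Toy data (hypothetical): same-person and different-person comparison scores.
genuine = [0.91, 0.85, 0.78, 0.66, 0.95, 0.40, 0.88, 0.73, 0.99, 0.81]
impostor = [0.10, 0.22, 0.35, 0.05, 0.41, 0.18, 0.70, 0.27, 0.12, 0.30]

threshold, fnmr = fnmr_at_fmr(genuine, impostor, target_fmr=0.1)
print(threshold, fnmr)
```

Tightening the target FMR pushes the threshold up, which generally raises the FNMR; reporting FNMR at a fixed FMR is what lets error rates from different algorithms be compared at the same operating point.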

How did facial recognition become so accurate?

Public biometric

The exponential accuracy progression of face recognition technology is largely due to the following two factors:

- Facial appearance has been the primary biometric trait throughout civilized human existence; and

- Facial appearance is not private.

In terms of the privacy of facial imagery, historically speaking, our facial appearance is the single least private piece of information about ourselves. When meeting a stranger, people may exhibit reluctance to provide simple information such as their name. Yet, they will provide their facial appearance immediately to countless strangers in day-to-day interactions. When in a public setting, the right to privacy of facial appearance simply does not and cannot reasonably exist. In fact, in some of the most liberal countries on the planet (e.g., France, Denmark) it is illegal for a person to conceal their face in public.

A tangible example of our lack of facial privacy in public settings can be seen when attending professional and/or televised sporting events. People pay hefty sums of money to attend games, knowing that their facial appearance may be broadcast on TV to audiences of millions.

Facial Privacy in Public Settings Examples

In Fan Example 2, a fan was stunned after his football team lost a game in the final seconds. The fan was briefly aired on TV, but it was enough for Internet memesters to turn the image into a meme that went viral. The fan never consented to his image being widely distributed across the Internet, but at the same time he had no recourse to prevent this distribution.

In both cases, the fans never consented to be broadcast on national television, or to further be spread online. Nor does any other fan when shown in the background of such televised events. Regardless, the lack of inherent privacy to one’s facial appearance means there is no recourse to prevent sharing facial appearance – aside from not appearing in public.

Though precedent does not protect privacy of facial appearance, privacy concerns still exist regarding facial appearance and facial recognition. In fact, emerging technologies like highly-accurate automated facial recognition can create significant privacy issues due to the highly public nature of facial appearance.

Deep Learning and Convolutional Neural Networks

In the last decade, a technological revolution rapidly advanced the world of computer vision and machine learning: deep learning via convolutional neural networks. Inspired by the human visual processing system, this technique applies highly tuned kernel convolutions against matrices of image pixels to yield powerful feature representations.
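As a rough illustration of a kernel convolution over a matrix of pixels, the sketch below slides a small kernel across a tiny grayscale image. The function `convolve2d`, the 3x4 image, and the hand-picked edge kernel are assumptions for illustration only; in a real convolutional neural network the kernel values are learned during training and many kernels are stacked in layers.

```python
def convolve2d(image, kernel):
    """Slide a kernel over a grayscale image ("valid" mode, no padding).

    Like most deep learning toolkits, this computes a cross-correlation:
    the kernel is not flipped before it is applied.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            total = 0
            # Weighted sum of the pixel patch under the kernel.
            for i in range(kh):
                for j in range(kw):
                    total += image[r + i][c + j] * kernel[i][j]
            row.append(total)
        output.append(row)
    return output


# Hypothetical image: dark on the left, bright on the right.
image = [
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
]
# Hand-picked kernel that responds to dark-to-bright vertical edges.
vertical_edge = [
    [-1, 1],
    [-1, 1],
]

feature_map = convolve2d(image, vertical_edge)
print(feature_map)  # [[0, 18, 0], [0, 18, 0]]
```

The output is large only where the image changes from dark to bright, which is the sense in which a convolution produces a feature representation of the pixels.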

The number of parameters in these models usually falls on the order of hundreds of thousands to hundreds of millions. Learning the optimal model parameters requires roughly another order of magnitude more imagery than the number of parameters, as suggested by the “rule of 10” heuristic in machine learning.
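The arithmetic behind that heuristic is simple enough to sketch. The helper `images_needed` and the two model sizes below are hypothetical, chosen only to span the parameter range mentioned above; the heuristic itself is a rule of thumb, not a guarantee.

```python
def images_needed(num_parameters, samples_per_parameter=10):
    """Estimate training images under a "rule of 10" style heuristic."""
    return num_parameters * samples_per_parameter


# Hypothetical model sizes within the range described in the text.
small_model = images_needed(500_000)
large_model = images_needed(100_000_000)

print(f"{small_model:,}")  # 5,000,000
print(f"{large_model:,}")  # 1,000,000,000
```

At the upper end, the estimate reaches a billion images, which is why the public abundance of facial imagery matters so much for this class of model.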

When provided with sufficient data, knowledge about the classification problem domain, machine learning methods, and GPU/supercomputing hardware, algorithm models can be trained that deliver truly stunning accuracy. In some cases, such as face recognition, these models can significantly surpass human performance.

NIST Face Recognition Algorithm Matching Accuracy

Face recognition has benefited tremendously from the combination of expansive data and incredibly powerful deep learning toolkits, and we expect error rates should continue to decline for years to come.

However, as discussed below, face recognition will also struggle to cross some hard boundaries. Eventually the “Moore’s law”-like effect achieved by face recognition algorithms these past 5 years may taper out.

Convenience

In addition to accuracy, another chief benefit of face recognition technology is convenience.

Using a face recognition system typically requires little effort. When paired with continuous authentication and real-time screening, such systems are often completely frictionless. When used as a method of access control, face recognition often requires less user effort and cooperation than fingerprint or iris recognition.

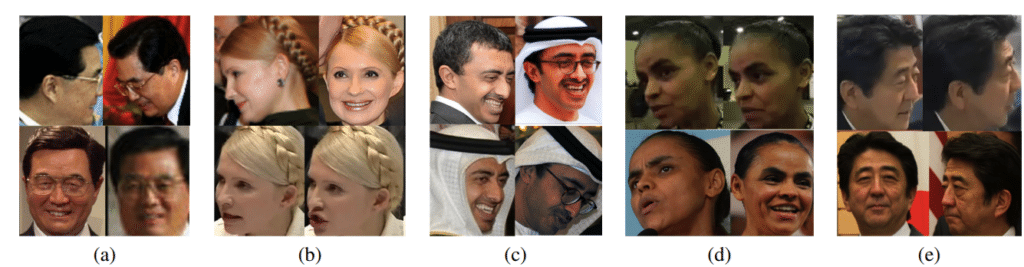

Due to such convenience, face recognition is the only primary biometric trait that can be used successfully in a fully unconstrained manner. Indeed, in a relatively short period of time, accuracies on highly unconstrained benchmarks like IARPA Janus have gone from extremely low a decade ago to approaching the accuracy of other biometric traits operating in fully cooperative settings.

Examples of Unconstrained Face Images in the IARPA Janus Dataset

Default Method for Humans

The most common method humans use to identify another person in day-to-day life is face recognition. Human face perception is such an important task that we have a large, dedicated region of the brain called the fusiform face area whose sole function is human face identification.

It is natural for automated systems to prioritize compatibility with our manual, legacy methods. For this reason – inherent human understanding – face recognition is often preferable to other biometric traits.

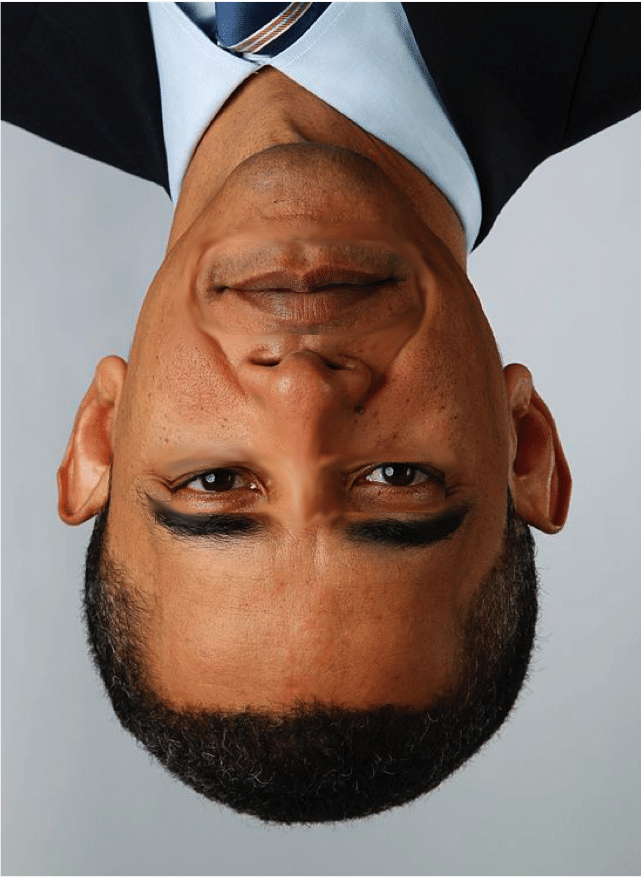

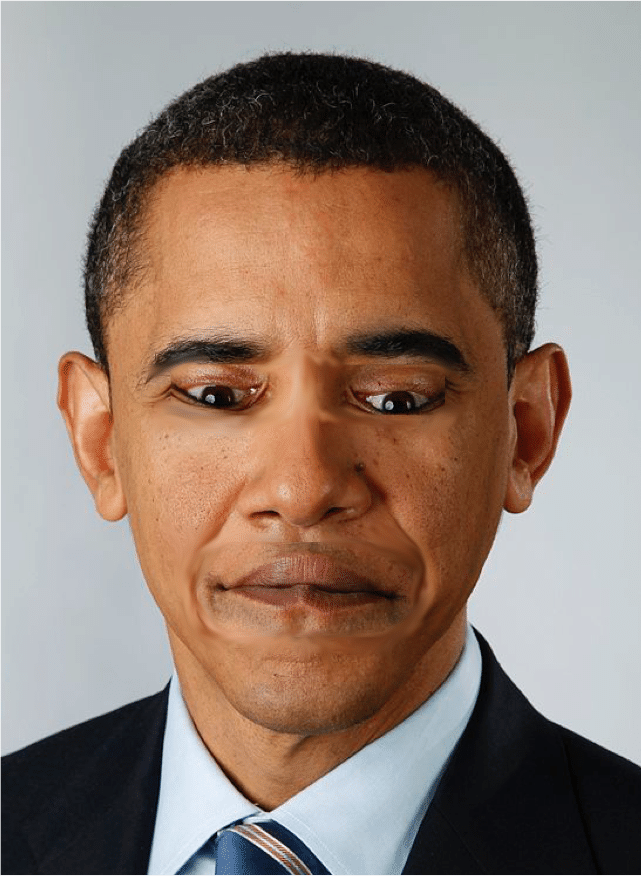

Perhaps there is no better example of human dependence on facial recognition than the Thatcher effect:

Example of the Thatcher effect

One of the biggest reasons for society to continue investing in the use of properly developed automated face recognition technology is our inherent reliance on the facial biometric in our day-to-day lives.

Limits of Face Recognition

While face recognition technology continues to deliver unprecedented convenience and accuracy, we must also address some fundamental limitations and challenges.

Identical Twins

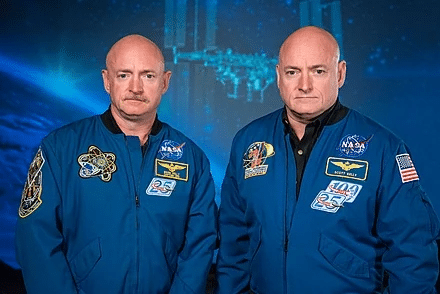

One of the more fundamental limits on face recognition is the challenge of identical twins and to a lesser extent familial relations. Though only accounting for roughly 0.3% of the population, identical twins in particular create a substantial challenge for automated face recognition algorithms. The reason is fairly obvious and illustrated by the following examples of pairs of identical twins:

There are a few algorithmic approaches that can be applied for identical twins. One option could be a focus on Level III facial features like freckles and moles, which are indeed unique between identical twins.

A more realistic approach for operating facial recognition technology in the presence of identical twins could entail administrative declaration of twin status. Knowing this information in advance allows for improved differentiation between twins while treating the rest of the population normally.

To a lesser extent, familial relationships also produce higher degrees of facial similarity, due to shared genetics. Even the “gold standard” of identification – DNA – faces similar limitations with twins and familial relationships.

While facial appearance and DNA are driven by genetics, fingerprint friction ridge patterns and iris texture are not. Instead, they are formed environmentally (e.g., fingerprints are formed in the womb). As a result, fingerprint and iris algorithms do not share the same limitations with twins and relatives.

Facial Spoofing

One of the biggest strengths for face recognition technology is the amount of publicly available data, which enables development of highly accurate algorithms – but this strength is a double-edged sword.

The abundance of facial imagery on LinkedIn, Facebook, company websites, yearbook photos, and a wide range of other sources, creates substantial risk for identity fraud. Acquiring copies of face photos is exceptionally easy.

As we learned from Beyoncé’s 2013 Super Bowl appearance, it’s essentially impossible to remove a photo from the Internet. Because facial appearance is our single most public piece of information, removing facial images from everywhere they exist will never be a workable solution.

Instead, to protect individual identities, significant effort must be invested in the development of spoof detection algorithms (also referred to as “liveness” checks or “presentation attack detection” algorithms).

Liveness checks are critical when face recognition is used for ID-verification through a mobile app or unattended customer service kiosks. As the accuracy of face recognition algorithms continues to increase at exponential rates, these unattended access control applications grow increasingly common. For other use cases, such as forensic identification or identity validation when a human operator is present (e.g., at a border crossing), liveness checks are far less relevant.

While fingerprint and iris biometric modalities can also be spoofed, acquiring samples to use in an attack is far more difficult, due to their private nature.

Decorative Cosmetics and Cosmetic Surgery

While facial appearance is largely determined by genetics, facial appearance can also transform through the use of makeup, hormones, and cosmetic surgeries.

Instead of cosmetics, early facial recognition research often focused on overt traits like facial hair. Over time, however, it has become increasingly clear that while factors like facial hair present a fairly insignificant challenge to facial recognition accuracy, cosmetics create more difficulty than originally understood.

Because cosmetics are used predominantly by female populations, this has in turn resulted in minor, but statistically significant, differences in facial recognition accuracy between males and females. While some may attribute the difference in accuracy between genders to some inherent bias in facial recognition algorithms, it is becoming increasingly clear that the decrease in accuracy is instead due to the latent factor of cosmetics use by females.

Single Trait

While we have ten fingers and two eyes, we only have one face. Collecting and managing samples from multiple fingers or irises is more challenging, but doing so can improve accuracy. With face recognition, this opportunity does not exist.

Ground Truth Issues

Another challenge with face recognition technology is that authoritative databases often lack pristine identity labels. Whether due to fraud, human operator error, or other factors, prominent government databases all seem to have identity label errors. These ground truth errors are not inherent to facial biometric algorithms themselves, but instead stem from the long-term legacy use of facial imagery in databases prior to automated face recognition.

Ground truth labeling errors can typically only cause an algorithm to measure higher error rates than actually exist (i.e., perform worse), so the error rates we capture in quantitative evaluations generally represent the upper bound for actual error rates on our algorithms. A portion of what’s measured as algorithm errors actually reflects data labeling errors. In other words, face recognition algorithms are more accurate than most benchmarks indicate.
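A small worked example shows why label errors inflate measured error rates. It assumes, for illustration, that a mislabeled "same person" pair actually contains two different people, which the algorithm correctly rejects except at its False Match Rate. The function `measured_fnmr` and all rates below are hypothetical.

```python
import math


def measured_fnmr(true_fnmr, label_error_rate, fmr=0.0):
    """FNMR a benchmark would report when some genuine pairs are mislabeled.

    true_fnmr:        algorithm's real miss rate on correctly labeled pairs
    label_error_rate: fraction of "genuine" pairs that are really impostors
    fmr:              chance the algorithm wrongly matches an impostor pair
    """
    # Real misses on pairs that truly show the same person.
    real_errors = (1 - label_error_rate) * true_fnmr
    # Correct rejections of mislabeled pairs, scored as misses by the benchmark.
    phantom_errors = label_error_rate * (1 - fmr)
    return real_errors + phantom_errors


# Hypothetical rates: 0.2% true FNMR, 0.1% of genuine pairs mislabeled.
reported = measured_fnmr(true_fnmr=0.002, label_error_rate=0.001)
print(round(reported, 6))  # 0.002998
```

Under these assumptions the benchmark reports roughly 0.3% error even though the algorithm's true miss rate is 0.2%; the gap is entirely attributable to the labels.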

Historically difficult to identify, these legacy errors in identity databases can reduce effectiveness of facial recognition algorithms. While ground truth errors are not easy to discover, automated face recognition algorithms have become astonishingly good at flagging potential errors for human review. However, this approach requires dedicated de-duplication processes.
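A de-duplication pass of this kind can be sketched as a pairwise comparison that flags disagreements between the matcher and the stored labels. Everything here is a simplified assumption: the record layout, the three-dimensional embeddings, the cosine similarity measure, and the 0.9 threshold are hypothetical, and a production system would use an indexed search rather than comparing every pair.

```python
import math
from itertools import combinations


def cosine_similarity(a, b):
    """Cosine of the angle between two embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def flag_label_conflicts(records, threshold):
    """Flag record pairs where the face match disagrees with the labels.

    records: list of (record_id, identity_label, embedding) tuples.
    """
    flagged = []
    for (id_a, label_a, emb_a), (id_b, label_b, emb_b) in combinations(records, 2):
        score = cosine_similarity(emb_a, emb_b)
        same_label = label_a == label_b
        if score >= threshold and not same_label:
            flagged.append((id_a, id_b, "possible duplicate identity"))
        elif score < threshold and same_label:
            flagged.append((id_a, id_b, "possible mislabeled record"))
    return flagged


# Hypothetical database: r4 looks like "alice" but is enrolled as "carol".
records = [
    ("r1", "alice", [1.0, 0.0, 0.0]),
    ("r2", "alice", [0.9, 0.1, 0.0]),
    ("r3", "bob", [0.0, 1.0, 0.0]),
    ("r4", "carol", [0.95, 0.05, 0.0]),
]

for conflict in flag_label_conflicts(records, threshold=0.9):
    print(conflict)
```

The flagged pairs are candidates only; as the text notes, they go to a human reviewer, who decides whether the label or the match is wrong.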

Identity label errors present significant challenges across all biometric traits, though they are harder to resolve for fingerprint and iris than for faces. While it may be comparatively easy to find ground truth errors in face recognition databases, finding them in fingerprint or iris databases is much more difficult. For a potential facial identity error, the human brain is highly adept at comparing facial appearance, making it easy to flag such inconsistencies when encountered. For fingerprint recognition and iris recognition, identifying errors is far more difficult, time-consuming, and expensive, often requiring expert analysis.

Perhaps no method can reduce the incidence of ground truth errors more than the incorporation of multiple biometric modalities. Many face systems already support use cases where fingerprints are available, and using both face and fingerprint biometrics significantly reduces the chances for fraud to exist in identity databases.

Summary

In the last two decades, the capabilities of automated face recognition technologies have undergone a stunning transformation. Once considered too inaccurate for use as a primary biometric trait, facial recognition is now the single most accurate biometric technology in the world. These exponential improvements will not likely slow anytime soon.

At the same time, every new technology comes with limitations. For facial recognition, that primarily includes identical twins and identity spoofing.

The other two primary biometric traits, fingerprint and iris, also have advantages and limitations.

All factors considered, given the extreme ubiquity of facial appearance in our daily lives, and the astonishing accuracy of modern face recognition algorithms, minimal drawbacks exist for the technology.

Most importantly, fusing face recognition with another mature biometric technology like fingerprint recognition can create a multi-modal biometric solution for nearly impervious unattended biometric identification.

Multi-modal biometrics are indeed the holy grail of identification. All biometric traits come with tradeoffs and weaknesses, but in scenarios where two or more disjoint traits can be measured together, the incidence of failure or fraudulent access becomes exceptionally low.
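The effect on fraudulent access can be illustrated with a back-of-the-envelope calculation. The sketch below assumes the two traits produce statistically independent errors and that access requires both to match (an "AND" rule); the function `and_fusion` and the error rates are hypothetical and are not measurements of any product.

```python
import math


def and_fusion(fmr_a, fnmr_a, fmr_b, fnmr_b):
    """Combined error rates when BOTH modalities must match (AND rule).

    Assumes errors of the two modalities are statistically independent.
    """
    # An impostor must fool both modalities at once.
    fused_fmr = fmr_a * fmr_b
    # A genuine user is rejected if either modality fails to match.
    fused_fnmr = 1 - (1 - fnmr_a) * (1 - fnmr_b)
    return fused_fmr, fused_fnmr


# Hypothetical operating points for a face matcher and a fingerprint matcher.
fused_fmr, fused_fnmr = and_fusion(
    fmr_a=1e-6, fnmr_a=0.01,
    fmr_b=1e-4, fnmr_b=0.02,
)
print(f"fused FMR:  {fused_fmr:.1e}")
print(f"fused FNMR: {fused_fnmr:.4f}")
```

The AND rule drives the false match rate down sharply at the cost of a somewhat higher false non-match rate, so practical systems often fuse scores rather than decisions to balance the two.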

This article is a summary of a presentation provided at the National Institute of Standards and Technology (NIST) International Face Performance Conference (IFPC) on November 16th, 2022.
